Researchers Characterize Chemical Structure of HIV Capsid

May 28, 2013

By Allison Proffitt

May 29, 2013 | Today, researchers at the University of Illinois at Urbana-Champaign announced the discovery of the chemical structure of the HIV capsid. The discovery was made possible by the Blue Waters supercomputer at the National Center for Supercomputing Applications (NCSA) and is published this week in Nature.

The first all-atom simulation of the HIV capsid, a system of 64 million atoms, was led by Klaus Schulten, a physics professor at the University of Illinois at Urbana-Champaign, and Juan R. Perilla, a postdoctoral researcher at the same university.

|

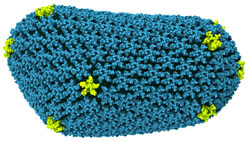

| All-atom model of the HIV-1 capsid (PDBID: 3J3Y). Courtesy of TCBG-UIUC. |

The HIV capsid contains the virus’ genetic material as the virus enters the cell. Once a cell is infected, the capsid bursts open and the virus can begin replicating. The HIV capsid is “the perfect target for fighting the infection,” Schulten said. If one could stop the capsid from releasing the genetic material, the virus would be thwarted.

“The structure of the HIV capsid has been a standing problem for many years,” said Perilla. “We’ve known that it exists… but the actual atomic detail of the structure has been unknown and people have been able to solve [only] small parts of the structure.”

Schulten adds: “The problem is the capsid is huge. Today… we know it’s made of three million atoms. It’s one of the largest structures that ever was discovered in living cells… and in order to handle this kind of structure, we needed enormous computing power.”

Schulten and Perilla combined experimental results from crystallography, NMR structure analysis, and electron microscopy with simulations run on the Blue Waters supercomputer.

“Computing can take the data from different experimental approaches… to find the best agreement structure, atom by atom, with the available experimental data,” said Schulten.

Perilla explains: “What we did was we put all this information together and we were able to come up with a model for the entire structure.”

Characterizing the structure of the HIV capsid opens up a broad range of research options. “The entire structure is a new pharmaceutical target that hasn’t been exploited so far,” explained Perilla. This is particularly exciting in HIV research, because the virus quickly develops resistance to treatments.

A Bold Plan

Taking on a molecular dynamics problem this large was a daunting undertaking.

“We’re usually very proud if we can do a 1-million atom simulation,” Schulten told Bio-IT World last year (see "Spectra Logic Tape Storage Beefs Up NCSA Blue Waters Supercomputer"). “Now it’s 60 million atoms… We literally have 20-100 times more data [than a few years ago]!”

A year later, Schulten says that the number of atoms wasn’t the biggest hurdle. “The hardest part [of the work] was being bold enough to do it,” he said. “When we applied for computing resources, [we were told], ‘Don’t do it; this is way too difficult. You’ll just waste resources.’”

But Irene Qualters, a program director at the National Science Foundation, says this is the type of big project for which Blue Waters was built. “It’s really what we had hoped for in boldly investing in Blue Waters… the vision requires bold but sustained investment in people and systems.”

Blue Waters has just started to flex its petaflop muscle. Powered by 3,000 NVIDIA Tesla K20X GPU accelerators, the Cray XK7 supercomputer is capable of sustained performance of one petaflop.

Schulten’s team is one of 30 teams from a range of disciplines using Blue Waters, Qualters said.

The simulations simply couldn’t have been done on a lesser computer, Schulten said. They consumed “something over a million node hours,” Perilla said, and used the NAMD and VMD software packages to manage the big data and the large structures. Combining Cray’s parallel computing with NVIDIA GPU accelerators, Blue Waters ran the simulations four times faster than standard options, Schulten said. “A huge team worked six years to make this possible,” he stressed.