How To Improve Biopharmaceutical Research Data Utilization?

Contributed Commentary by Chin Fang

January 8, 2020 | Many enterprise network security people intuitively put everything behind a firewall, even though it is well established that firewalls are a big hindrance to high-speed data flows. On many occasions I have seen frustrated IT professionals puzzled by their inability to move data quickly, even though they had been told that high-speed network connections were available. Their security colleagues usually stay quiet unless they are shown hard, easy-to-reproduce “evidence”. So, how can one come up with such “evidence”? The technique is actually well known in the high-end Research & Education (R&E) networking community and is documented in the U.S. DOE Energy Sciences Network’s (ESnet) fasterdata online knowledge base article “iperf3 at 40Gbps and above”.

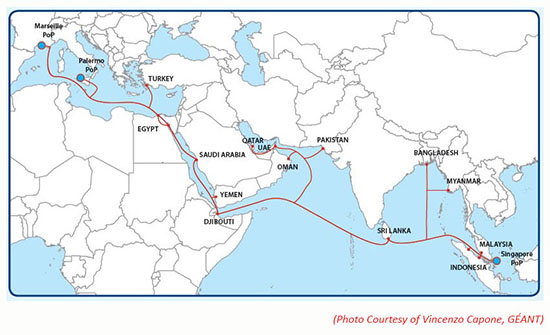

In early November 2019, I witnessed firsthand as networking engineers at two supercomputing centers, the Interdisciplinary Centre for Mathematical and Computational Modelling (ICM) at the University of Warsaw (Poland) and the A*STAR Computational Resource Centre (A*CRC, Singapore), used this simple tool (running 5 instances at each end) to measure the available bandwidth of the new Collaboration Asia Europe-1 (CAE-1) 100Gbps link, which connects London and Singapore across 12,375 miles. The figure below shows the undersea cable system used by CAE-1. Note that this link has a profound social impact: for the first time, many countries in the Middle East, North Africa, and around the Indian Ocean now have 100Gbps “digital on-ramps” to the rest of the world!

The iperf3 tool can measure the available bandwidth of a link simply and accurately. So if the measured “apparent bandwidth” is consistently far lower than it should be (e.g. 20Gbps out of 100Gbps), simply show the results to these “quiet” network security folks and ask them to do their jobs right! Management should be informed as well. It’s important to protect the company’s security, but not at the steep price of slowing business progress many times over!
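The multi-instance measurement described above can be scripted so that the “evidence” is easy to reproduce. The Python sketch below is a minimal illustration, not the ESnet procedure itself. It assumes iperf3 is installed on both hosts and that one iperf3 server per instance is already listening on consecutive ports at the far end (e.g. started with `iperf3 -s -p 5201`, `iperf3 -s -p 5202`, and so on). The helper names, host name, and port numbers are hypothetical. Each client runs with `-J` so that its report is JSON; the receiver-side throughputs (`end.sum_received.bits_per_second`) of the concurrent instances are summed and compared with the link’s nominal capacity.

```python
import json
import subprocess
from typing import List


def build_client_commands(server: str, base_port: int, instances: int,
                          seconds: int = 30) -> List[List[str]]:
    """Return one iperf3 client command per instance, each on its own port.

    Assumes (hypothetically) that iperf3 servers listen on base_port,
    base_port + 1, ... at the far end.
    """
    return [
        ["iperf3", "-c", server, "-p", str(base_port + i),
         "-t", str(seconds), "-J"]  # -J: print the report as JSON
        for i in range(instances)
    ]


def received_bps(report_json: str) -> float:
    """Receiver-side TCP throughput (bits/s) from one iperf3 JSON report."""
    report = json.loads(report_json)
    if "error" in report:  # iperf3 -J reports failures in an "error" field
        raise RuntimeError(report["error"])
    return float(report["end"]["sum_received"]["bits_per_second"])


def measure_aggregate_bps(server: str, base_port: int, instances: int,
                          seconds: int = 30) -> float:
    """Start all clients at once and sum their receiver-side throughput."""
    procs = [
        subprocess.Popen(cmd, stdout=subprocess.PIPE, text=True)
        for cmd in build_client_commands(server, base_port, instances, seconds)
    ]
    # The runs overlap in time; each report is collected after its run ends.
    return sum(received_bps(p.communicate()[0]) for p in procs)


def fraction_of_nominal(measured_bps: float, nominal_bps: float) -> float:
    """Share of the advertised link capacity actually achieved."""
    return measured_bps / nominal_bps
```

For example, `total = measure_aggregate_bps("dtn.example.org", 5201, 5)` followed by `fraction_of_nominal(total, 100e9)` gives the share of a 100Gbps link achieved by five concurrent instances. Running several independent processes, rather than one, mirrors the “5 instances at each end” setup; host tuning also matters at these rates and is not handled here.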

A few other reasons for poor data mobility, and thus low utilization:

- Not knowing one’s storage well, yet wanting to transfer data fast. The seemingly innocent question, “How fast is your storage system with this kind of data?”, almost never gets an answer. A storage system’s throughput always depends on the type of data it serves, the speed and tuning of the interconnect (e.g. InfiniBand, Ethernet) linking the storage clients to the system, the tuning of each storage client and the number of such clients, and more. Understanding it well takes a methodical approach.

- An unreasonable fascination with virtual machines and containers. Neither is appropriate for heavy, I/O-driven workloads such as moving data at scale and speed. A rule of thumb: whenever the desired data transfer rate is ≥ 10Gbps, use physical servers dedicated to data movement if feasible. Note that several leading public cloud service providers, including AWS, Microsoft Azure, and IBM, offer physical instances too!

- Attempting to use a single server for both sending and receiving. This is like asking two fast typists to share a single typewriter and still type as fast as usual!

- Ignoring the obvious fact that the world faces exponential data growth. Data mover applications must be able to scale out (i.e., be inherently cluster-oriented). Yet many insist on using 20+-year-old incumbent tools, commonly classified as Managed File Transfer (MFT) tools, coupled with a so-called cluster workload manager (e.g. Slurm) to form a "cluster" (in reality just a bunch of servers). This is despite the fact that such businesses often deploy true cluster applications such as IBM Spectrum Scale (aka GPFS) to meet their fast-growing storage needs. Why must exponentially growing data be moved with legacy, host-oriented tools?

- AI and ML generating and consuming Lots of Small Files (LOSF)! Seasoned data management professionals know that small files (or small objects, for object storage) always yield poor throughput. This is simply down to the laws of physics: every file or object carries a fixed per-item cost (metadata lookups, opens and closes, or requests) regardless of how few bytes it holds. Even for reading, the storage must do far more work to deliver the same data volume, so throughput is always poor. Yet AI and ML need fast data feeds, to GPUs for example. Thus, many AI and ML practitioners are hampering themselves with problems of their own making!

- Bringing computing power to the data, or creating data lakes. There are serious economic reasons why this is faulty thinking. Storing data in huge volumes to form a “lake” implies either a large deployment of storage systems or a high cost if the data is kept in a public storage cloud. In the former case, besides the high operating costs, when the time comes for a technology refresh (every 3 to 5 years for an on-premises deployment), it is going to be a tedious and costly IT pain. Furthermore, even with the 20/80 rule, huge amounts of data demand copious computing power, which is expensive to acquire, set up, operate, and support. A far better option is to keep data mobility high so that data never accumulates into a “lake”. The technology and solutions are uncommon but do exist. It is feasible to overcome data gravity.
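One way to start answering “How fast is your storage system with this kind of data?” is simply to measure it. The Python sketch below is deliberately narrow: a single client, write-only, assuming file size is the only variable of interest. It writes the same total volume as files of different sizes and reports bytes per second for each. The function names are hypothetical. A real assessment would also cover reads, many concurrent clients, and the interconnect, and dedicated tools such as fio are the usual choice for that.

```python
import os
import tempfile
import time
from typing import Dict, Iterable


def write_throughput(directory: str, total_bytes: int, file_size: int) -> float:
    """Write about total_bytes into `directory` as files of file_size bytes.

    Returns bytes per second. Each file is fsync'ed so the timing includes
    pushing data to storage, not just into the OS page cache.
    """
    n_files = max(1, total_bytes // file_size)
    # Random bytes so compressing storage cannot shrink the payload
    # (the same buffer is reused, so deduplicating storage still could).
    payload = os.urandom(file_size)
    start = time.perf_counter()
    for i in range(n_files):
        path = os.path.join(directory, f"bench_{i:08d}.bin")
        with open(path, "wb") as f:
            f.write(payload)
            f.flush()
            os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return n_files * file_size / elapsed


def sweep(target_dir: str, total_bytes: int,
          file_sizes: Iterable[int]) -> Dict[int, float]:
    """Measure write throughput per file size, same total volume each time."""
    results = {}
    for size in file_sizes:
        # Fresh scratch directory per run; it and its files are removed after.
        with tempfile.TemporaryDirectory(dir=target_dir) as scratch:
            results[size] = write_throughput(scratch, total_bytes, size)
    return results
```

For example, `sweep("/mnt/project/scratch", 256 * 2**20, [4 * 2**10, 2**20, 64 * 2**20])` (a hypothetical mount point) compares 4 KiB, 1 MiB, and 64 MiB files at the same 256 MiB total; the small-file figure typically trails well behind.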
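The small-file penalty noted above follows from simple arithmetic. As a simplifying assumption, suppose every file or object costs a fixed overhead `t_o` (metadata lookup, open/close, or a request round trip) before its `s` bytes stream at rate `B`. The effective throughput is then `s / (t_o + s/B)`, which collapses once `s/B` is small relative to `t_o`. The model and the numbers in the usage note (1 ms overhead, 1 GB/s streaming rate) are illustrative, not measurements.

```python
def effective_throughput(file_size: float, per_file_overhead_s: float,
                         streaming_rate: float) -> float:
    """Bytes/s delivered when each file pays a fixed overhead before streaming.

    Simplified model (an assumption, not a storage-specific formula):
    time per file = per_file_overhead_s + file_size / streaming_rate,
    so throughput = file_size / time per file.
    """
    return file_size / (per_file_overhead_s + file_size / streaming_rate)
```

With 1 ms of overhead per file and a 1 GB/s (1e9 bytes/s) streaming rate, 10 KB files deliver about 9.9 MB/s, 1 MB files 500 MB/s, and 1 GB files about 999 MB/s: the same hardware, a hundredfold difference driven only by file size.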

I hope the above motivates more people to do better at the foundation of distributed, data-intensive science and engineering (moving data at scale and speed) and thereby boost their research efficiency.

Chin Fang, Ph.D. is the founder and CEO of Zettar Inc. (Zettar), a Mountain View, California based software startup. Zettar delivers a GA-grade hyperscale data distribution software solution for tackling the world’s exponential data growth. Since 2014, he has personally transferred more than 50PB of data over various distances. Chin can be reached at fangchin@zettar.com.